The VE180 is a good choice for indoor people tracking and outdoor tracking applications.

This video engine can be used for applications such as people counting and dwell time, and typical deployments include commercial buildings. The following sections detail ideal settings only. Configuration options described here are not mandatory.

The VE180 is a robust analytic. Use the VE180 engine (over the VE150 or VE250) in situations where people are crossing paths or walking together and you want to track them individually.


Ideal Setup Conditions

Environment

• Place the camera away from moving objects, such as elevator doors, and from objects that get moved, such as carts, carriages, and large luggage.

• Avoid placing the camera near escalators or stairs. Ensure that people are tracked on flat, level surfaces for accurate detection.

• Ensure that people are fully visible to the camera and not partially hidden by shelves or low walls.

Lighting

• Provide at least 75 lux.

• Ensure that lighting is consistent throughout the space and throughout the period during which people are tracked.

• Ensure that there are no shiny surfaces, such as a shiny floor, in the area where people are tracked. A shiny floor may reflect light and shadows from the surrounding area and generate false counts.

• In an indoor tracking area, avoid sunlight from outside if possible. Sunlight that is occasionally obscured by moving clouds casts shadows that can cross a counting line.

• Depending on the angle of an indoor light, the shadows of people walking by (but not through) a counting line can trigger false counts.

Counting Lines

• An overhead view is usually more accurate for counting people across a line, but it covers less ground than a 45-degree view if the ceiling is low.

• Try to have at least 1 meter of floor space on either side of the counting line.

• In angled views, try to have at least 2 meters of space on either side of the counting line.

• Setting person size: A person crossing the line should take up no more than about 1/3 of the view in width and no more than 1/2 of the view in height.

Camera Placement

• Accuracy decreases for nearly horizontal views (for example, less than 20 degrees of declination).

• If the camera is placed such that people appear smaller than 20 pixels on screen, counting accuracy decreases and you will need to use the advanced option settings.

Task 1: Configure VE180

To configure the VE180:

1. In the Client, from the Server menu, select Configuration. The Configuration dialog box opens. This allows you to configure devices for the currently selected server.

2. In the left pane, click Devices. The Devices dialog box opens.

3. Click the camera you want to configure for use with video analytics and click Edit.

4. Click the Analytics Engines tab. By default, the VE250 analytic is selected automatically. Clear the VE250 option and select VE180 – Motion Tracking.

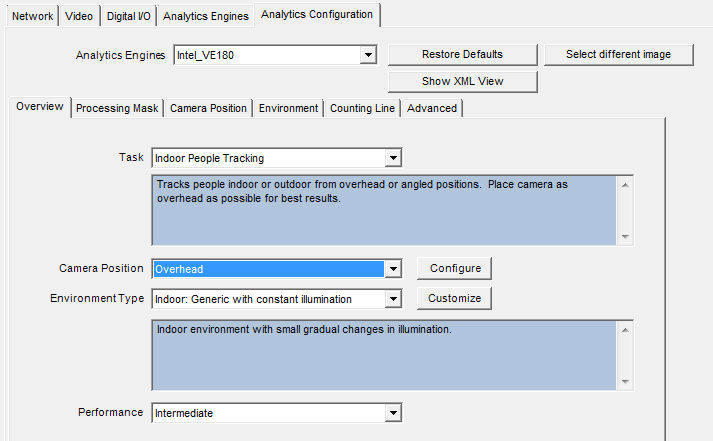

5. Click the Analytics Configuration tab. From the Analytics Engines drop-down list, select Intel_VE180.

6. On the Overview subtab, click the Select Different Image button to select an image from your camera on which to base your analytic configuration.

7. The following subtabs contain options to configure your analytic.

Select and configure the task you want the analytic to perform as follows:

For Indoor People Tracking:

When you select the Indoor People Tracking task, the Multi-Layer Hybrid Background Model is selected by default on the Environment subtab. See the Environment subtab section for further details on available configuration options.


For Outdoor Multi-Class Object Tracking:

• Uncalibrated: Use only if objects are well separated and accurate counts are not required.

3. From the Environment Type drop-down list, select one of the following:

• Outdoor: Generic

• Outdoor: Water

• Outdoor: Thermal camera

• Custom: Displayed automatically if settings have been changed from their default values on the Environment subtab or in the Advanced settings.

4. From the Performance drop-down list, select the level of CPU processing: Custom, Low, Intermediate, or High. By default, Intermediate is selected.

When you select the Outdoor Multi-Class Object Tracking task, the Outdoor Background Model is selected by default on the Environment subtab. See the Environment subtab section for further details on available configuration options.


For Face Tracking: From the Task drop-down list, select Face Tracking. No additional options are available on the Overview subtab. See the sections on the Processing Mask, Counting Line, and Advanced subtabs for configuration options.

For supported devices, automatic calibration determines the optimal camera configuration for scenes containing people. You can switch to manual calibration at any time, and the system retains its last best estimate of the camera position. The Auto Calibration option is available in Server Configuration > Devices > Analytics Configuration > Overview > Camera Position.

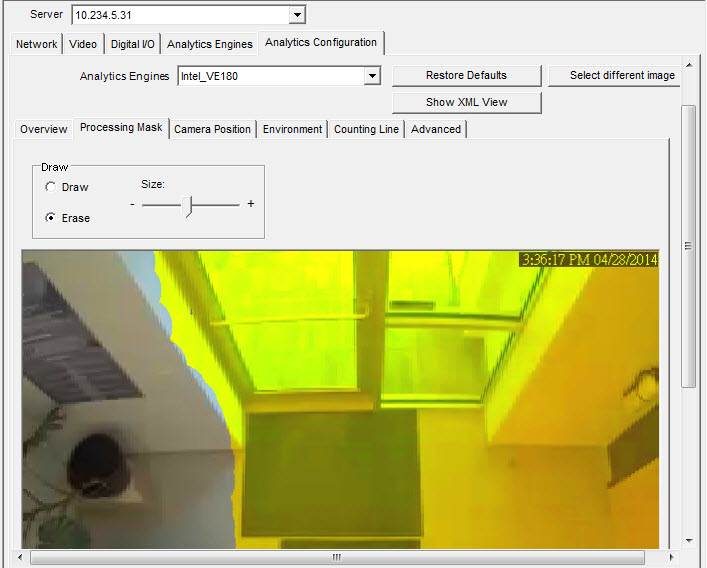

1. Using the displayed image from the video, define the area in yellow (a mask) where objects in motion should be detected. (See the topic Masks and Common Settings for details.)

• Select the Erase option to erase the yellow mask.

• Select the Draw option to draw the yellow mask.

• The Size slider adjusts the pen thickness.

Option/Design |

Description |

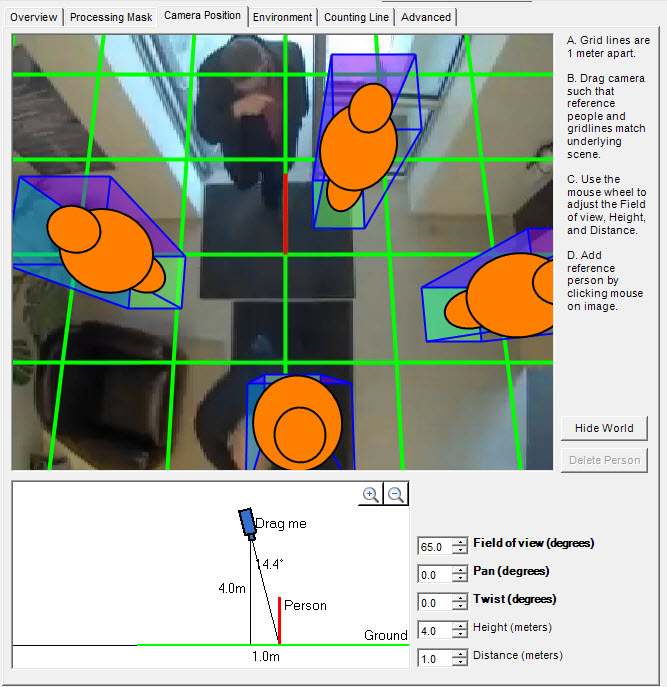

Grid |

Grid lines are 1 meter apart. Automatically displayed over scene. |

Reference person icon |

An orange colored form representing person size and position in an image. Four populate the image automatically. Move the reference people icons to various positions in the image, foreground and background as they would naturally occur. To add more reference person icons, click your mouse on the image. |

Delete Person button |

Removes a reference person icon. Click the reference person icon and then click the Delete Person button. |

Hide/Show World button |

Hides (or displays) the grid lines and reference person icons so that you can view the actual scene in the image. |

Interactive section |

Allows you to adjust the grid and reference person size to match the underlying scene. Using your mouse, you drag the camera icon up and down and left and right. |

+/- magnification icons |

Allow you to zoom in and out in the interactive section |

Field of view (FOV) |

Horizontal field of view.

If you increase the value, you must drag the camera icon closer (down) to get the correct person size. If you decrease the value, you must drag the camera icon further away for the same rough size. If you obtain the exact camera position by measuring the actual height and distance, then you must adjust Field of view to make the reference people icons the right size in the scene.

Mouse wheel perspective control Allows you to control the amount of perspective in the scene without changing the overall scale. (Allows you to make a person icon a certain size while changing the Field of view so that the tilt of the other people icons and their relative size is correct.)

Position the mouse over the little diagram. Rotate the mouse wheel to increase or decrease the Field of view in 2.5 degrees increments, creating an opposite proportional change in the ground distance parameter.

Example: Ground distance is 10.0 meters and the Field of view is 60 degrees. One mouse wheel click changes Field of view to 62.5 degrees and ground distance to (60/62.5)*10.0 = 9.6 m. A mouse wheel click in the other direction changes Field of view to 57.5 degrees and ground distance to (60/57.5)*10.0 = 10.43 m. |

Pan |

Movement of the camera from left to right or right to left around the imaginary vertical axis that runs through the camera.

When the camera is pointed at the ground, it basically tilts the ground plane. When the camera is closer to horizontal, it looks more like a rotation of the ground plane.

Here Pan tilts the grid against the ground plane, which is incorrect based on the actual scene.

Most useful in an near-overhead view when the camera is pointing off to the side (that is, the deflection from vertical is not completely on the camera view’s up-down axis) |

Twist |

Rotation of the camera around the axis that extends directly out of the camera through the center of the view.

Useful in an angled view when the camera is twisted a bit so that a vertical line in the world does not correspond to up-down in the image. Also useful if the ground in the image appears to tilt a bit from left to right |

Height and Distance fields |

Values correspond to the movements of the camera icon in the interactive section. |

Warning message |

Symphony displays a warning message if the camera angle does not agree with Overhead selection |
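The mouse wheel perspective control keeps the product of Field of view and ground distance constant. That is why one click in the Field of view example gives 9.6 m and the other gives about 10.43 m. The short Python sketch below reproduces that arithmetic. The function name is hypothetical. Only the 2.5-degree step and the inverse-proportional rule come from the description above.

```python
from typing import Tuple

FOV_STEP_DEG = 2.5  # change in Field of view per mouse wheel click


def wheel_clicks(fov_deg: float, ground_distance_m: float,
                 clicks: int) -> Tuple[float, float]:
    """Apply mouse wheel clicks; ground distance scales inversely with FOV."""
    new_fov = fov_deg + FOV_STEP_DEG * clicks
    if new_fov <= 0:
        raise ValueError("field of view must stay positive")
    # FOV x ground distance is held constant across the change.
    new_distance = ground_distance_m * fov_deg / new_fov
    return new_fov, new_distance


print(wheel_clicks(60.0, 10.0, 1))   # FOV 62.5, distance 9.6
print(wheel_clicks(60.0, 10.0, -1))  # FOV 57.5, distance about 10.43
```

The function works from the starting values rather than chaining single clicks. The ratios telescope, so several clicks at once give the same result as applying them one at a time.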

When you select the Indoor People Tracking task, the Multi-Layer Hybrid Background Model is selected by default on the Environment subtab. This is the recommended background model for most indoor applications. Depending on the environment, you may need to modify the following Multi-Layer Hybrid Background Model configuration options to optimize analytics results.

Common

| Option | Description |
| --- | --- |
| Background learning duration (seconds) | Determines the approximate length of time it takes a foreground object that becomes stationary to merge into the background. Important: If an object has periodic movement with a period greater than the specified time, that object is always considered a foreground object (it is never merged into the background). The background is updated continuously, not only when the analytic engine starts. |
| Background Sensitivity | Sets the minimum background sensitivity needed for an object to be detected as a foreground object. Important: If this is set to a low value, objects with a color similar to the background will NOT be detected as valid objects to track. |
| Learn normal behavior | Learns the percentage of time each pixel is in motion so that this regular motion can subsequently be ignored. Useful, for example, when tracking boats on water. Disabled by default. |
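Learn normal behavior is described only as learning how often each pixel is in motion. The Python sketch below illustrates that idea under stated assumptions. It is not the VE180 algorithm, which this guide does not document. Foreground masks are lists of boolean rows. The `max_fraction` cutoff that decides when motion counts as "normal" is a hypothetical parameter, and so are both function names.

```python
from typing import List, Sequence

Mask = Sequence[Sequence[bool]]


def motion_frequency(history: Sequence[Mask]) -> List[List[float]]:
    """Fraction of frames in which each pixel was foreground."""
    if not history:
        raise ValueError("need at least one frame")
    n = len(history)
    h, w = len(history[0]), len(history[0][0])
    counts = [[0] * w for _ in range(h)]
    for mask in history:
        for y in range(h):
            for x in range(w):
                if mask[y][x]:
                    counts[y][x] += 1
    return [[c / n for c in row] for row in counts]


def suppress_normal_motion(mask: Mask, freq: List[List[float]],
                           max_fraction: float = 0.5) -> List[List[bool]]:
    """Keep foreground only where motion is not habitual (hypothetical cutoff)."""
    return [[bool(px) and f < max_fraction for px, f in zip(mrow, frow)]
            for mrow, frow in zip(mask, freq)]


# Pixel 0 is "water" (always moving); pixel 1 moved in only one of four frames.
history = [[[True, False]], [[True, True]], [[True, False]], [[True, False]]]
freq = motion_frequency(history)                    # [[1.0, 0.25]]
print(suppress_normal_motion([[True, True]], freq))  # [[False, True]]
```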

| Option | Description |
| --- | --- |
| Method | • % of screen change: Monitors the percentage of the scene that is considered foreground. If the amount of foreground is too high, model relearning is triggered. • Brightness change: Monitors the scene for abrupt, overall changes in brightness (perhaps caused by a change in lighting or a weather event). • Spectrum change: Monitors the scene for abrupt, overall changes in brightness, but is less sensitive to local changes (such as a person in a dark coat walking by). • Combined change: A combination of % of screen change and Spectrum change. |
| Sensitivity | Ranges from 0 to 100. A high sensitivity means that relearning is triggered easily; for example, a sensitivity of 80 triggers relearning when just 20% of the screen is foreground. A low sensitivity triggers relearning only in extreme situations. |
| Time to wait before resuming tracking (seconds) | Sets how long to wait before resuming object tracking. Ideally this value should be zero, but if you are aware of a lighting issue where tracking immediately can cause false alarms, set this to the number of seconds needed for the light to stabilize before tracking starts. |
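The Sensitivity description gives one data point for the "% of screen change" method: a sensitivity of 80 triggers relearning at 20% foreground. The Python sketch below assumes a linear rule, threshold = (100 − sensitivity)%, that fits that single example. Symphony does not document this rule. Treat both the formula and the function name as hypothetical.

```python
from typing import Sequence


def should_relearn(foreground: Sequence[Sequence[bool]], sensitivity: float) -> bool:
    """Hypothetical '% of screen change' trigger.

    Assumes relearning starts once the foreground fraction reaches
    (100 - sensitivity) / 100, extrapolated from the documented example
    (sensitivity 80 -> 20% of the screen).
    """
    if not 0 <= sensitivity <= 100:
        raise ValueError("sensitivity must be between 0 and 100")
    total = sum(len(row) for row in foreground)
    fg = sum(sum(1 for px in row if px) for row in foreground)
    threshold = (100 - sensitivity) / 100
    return total > 0 and fg / total >= threshold


# A 10x10 mask whose first two rows (20% of the pixels) are foreground.
mask = [[i < 2 for _ in range(10)] for i in range(10)]
print(should_relearn(mask, 80))  # True: 20% reaches the assumed 20% threshold
print(should_relearn(mask, 70))  # False: 20% is below the assumed 30% threshold
```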

| Option | Description |
| --- | --- |
| Shadow/Illumination Removal | Disabled by default. |
| Strength | A high setting is helpful when you have a very strong, dark shadow; a low setting is helpful when you have a light shadow. For example, 0.1 corresponds to a light shadow and 1.0 to a very strong, dark shadow. Disabled by default. |

Additional background models and configuration options are available for situations where the default indoor settings are not sufficient for your needs. See Aimetis Knowledge Base article #10305 for details on additional VE180 Indoor People Tracking configuration options.

When you select the Outdoor Multi-Class Object Tracking task, the Outdoor Background Model is selected by default on the Environment subtab. Depending on the environment, you may need to modify some of the background model configuration options to optimize analytics results.

| Option | Description |
| --- | --- |
| Snow Detection | Enable this option to configure the background model to detect and track objects in areas covered by snow. Disabled by default. |

| Option | Description |
| --- | --- |
| Detect Small Objects | Enable this option to configure the background model to detect and track smaller objects. For example, an office tower camera that covers a large pedestrian traffic area would show uniformly small objects in the camera view. |

| Option | Description |
| --- | --- |
| Simple | Enabled by default. Uses less CPU than Complex and works for most situations. |
| Complex | Removes more shadows in cases where there are strong shadows, but uses more CPU. |
| Strength | Defines how aggressively to remove shadows. • Increasing the strength value can cause more objects to be missed. • Decreasing the strength value can result in shadows being tracked as objects. |

| Option | Description |
| --- | --- |
| Track Slow Moving Objects | Tracks an object moving very slowly across the video, or an object coming directly toward or moving directly away from the camera (which appears to be moving slowly). Disabled by default. When disabled, slow-moving objects tend to become part of the background and are not tracked. When enabled, slow-moving objects are tracked. Important: This option might increase false alarms in situations where an object stands still in the video for a long time. |
| Object Speed | Categorizes the speed of the tracked object. |

| Option | Description |
| --- | --- |
| Object Contrast | Controls how sensitive the background model is to perceived similarities between the background and objects. If an object in the camera view appears similar to the background (such as a person in dark clothing against a dark background), increasing the object contrast threshold increases the model's ability to detect the object against the dark background. |

| Option | Description |
| --- | --- |
| Method | • % of screen change: Monitors the percentage of the scene that is considered foreground. If the amount of foreground is too high, model relearning is triggered. • Brightness change: Monitors the scene for abrupt, overall changes in brightness (perhaps caused by a change in lighting or a weather event). • Spectrum change: Monitors the scene for abrupt, overall changes in brightness, but is less sensitive to local changes (such as a person in a dark coat walking by). • Combined change: A combination of % of screen change and Spectrum change. |
| Sensitivity | Ranges from 0 to 100. A high sensitivity means that relearning is triggered easily; for example, a sensitivity of 80 triggers relearning when just 20% of the screen is foreground. A low sensitivity triggers relearning only in extreme situations. |
| Time to wait before resuming tracking (seconds) | Sets how long to wait before resuming object tracking. Ideally this value should be zero, but if you are aware of a lighting issue where tracking immediately can cause false alarms, set this to the number of seconds needed for the light to stabilize before tracking starts. |

Additional configuration options are available for situations where the default outdoor settings are not sufficient for your needs. See Aimetis Knowledge Base article #10306 for details on additional VE180 Outdoor People Tracking configuration options.

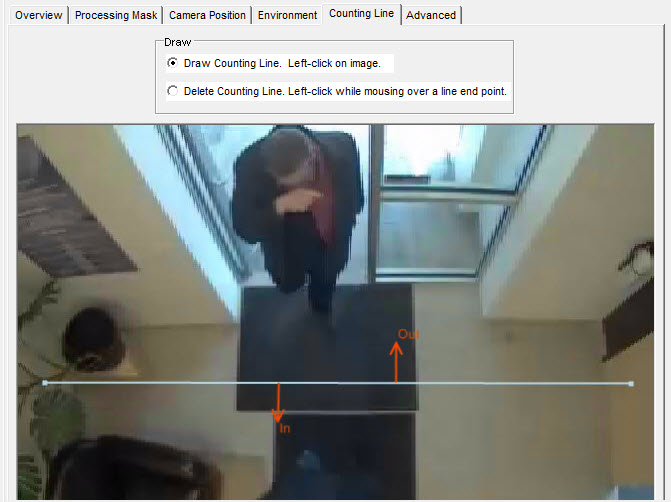

Click this subtab if you want to use the analytic as a people counter.

1. Ensure that the Camera Position and Motion Analysis mask are set properly, and then click the Counting Line subtab.

2. By default, the Draw Counting Line option is selected.

3. Left-click the image and draw a line across which people (items) are counted as they pass the delineated area.

• The line appears with arrows indicating which crossing direction is counted as In (into the delineated area) and which as Out (out of the delineated area).

• To reverse the In-Out orientation of the line, draw the line in the opposite direction. (Delete the original line first.)

4. Click OK to save settings.
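A counting line's In and Out directions depend on the order in which its endpoints were drawn. This is why redrawing the line reverses the arrows. The Python sketch below shows one standard way to classify a crossing: it takes the sign of a 2D cross product. Symphony's internal method is not documented. Which side counts as "In" is a hypothetical convention here, as are the function names.

```python
from typing import Optional, Tuple

Point = Tuple[float, float]


def side(a: Point, b: Point, p: Point) -> float:
    """2D cross product of (b - a) and (p - a); its sign tells which side p is on."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def count_crossing(start: Point, end: Point,
                   prev: Point, curr: Point) -> Optional[str]:
    """Classify the movement prev -> curr across the line drawn start -> end.

    Returns 'In', 'Out', or None. Mapping the positive side to 'In' is a
    hypothetical convention; the result flips if the line is drawn reversed.
    """
    s0, s1 = side(start, end, prev), side(start, end, curr)
    if s0 * s1 >= 0:
        return None  # stayed on one side (or only touched the line)
    if side(prev, curr, start) * side(prev, curr, end) > 0:
        return None  # changed sides beyond the ends of the drawn segment
    return "In" if s0 > 0 else "Out"


start, end = (0.0, 0.0), (10.0, 0.0)
print(count_crossing(start, end, (5.0, 1.0), (5.0, -1.0)))  # In
print(count_crossing(end, start, (5.0, 1.0), (5.0, -1.0)))  # Out: line drawn in reverse
```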

Depending on the environment, you may need to modify the following background model configuration options to optimize analytics results:

| Option | Description |
| --- | --- |
| Analysis FPS | Indicates how many frames per second the system analyzes. Typically, the default value should not be changed. However, in many cases the analysis frame rate can be reduced to conserve CPU; doing so may degrade the algorithm's performance. |
| Capture Resolution | Displays the resolution at which the video is being recorded. This can be changed in the Device setup. |
| Analysis Resolution | Setting the Analysis Resolution to the same value as the Capture Resolution ensures that objects are detected as far away as possible. However, to conserve CPU resources, you can downsample the video sent for analysis. Downsampling reduces CPU requirements but may also reduce the detection range of the video analytic. |
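Downsampling shrinks both the number of pixels analyzed and the apparent size of each person. This is why it can reduce detection range. The Python sketch below computes both effects. It ties the second effect to the 20-pixel guideline under Camera Placement. Two assumptions apply: CPU cost scales roughly with the fraction of pixels analyzed, which this guide does not state, and the helper name is hypothetical.

```python
from typing import Tuple


def downsample_effect(capture: Tuple[int, int], analysis: Tuple[int, int],
                      person_height_px: float) -> Tuple[float, float]:
    """Return (fraction of pixels analyzed, person height at analysis resolution)."""
    cw, ch = capture
    aw, ah = analysis
    pixel_fraction = (aw * ah) / (cw * ch)
    # Vertical size scales with the ratio of analysis rows to capture rows.
    scaled_height = person_height_px * ah / ch
    return pixel_fraction, scaled_height


# Halving each dimension of a 1080p stream analyzes a quarter of the pixels,
# but a 36 px person shrinks to 18 px, below the 20-pixel guideline.
fraction, height = downsample_effect((1920, 1080), (960, 540), 36)
print(fraction, height)  # 0.25 18.0
```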

The majority of the Advanced subtab settings are for experts only. Values should be changed only in cases where the analytic engine is not functioning as expected.

For VE180 Indoor People Tracking, see Aimetis Knowledge Base article #10305 for details on additional VE180 Indoor People Tracking configuration options.

For VE180 Outdoor Object Tracking, see Aimetis Knowledge Base article #10306 for details on additional VE180 Outdoor People Tracking configuration options.

Task 2: Create a Rule using VE180

To configure a Rule using the VE180:

1. You must configure the VE180 before creating a Rule that uses the VE180.

2. From the Server menu, select Configuration and then Rules.

3. Click New. The Rule Wizard opens. Read the overview and click Next.

4. Select the check box next to the camera name. If it is a PTZ camera, select the camera tour position check box.

5. From the Select video engine for those running on camera drop-down list, select VE180.

6. For a digital fence, select the Use Fences option:

a. Select the Any Fence option and draw a line over the image with your mouse. The arrows that are displayed automatically when you draw a line define the direction in which the offending object must cross the line to cause an alarm.

b. With the Any Fence option selected, click the arrows to change their properties.

• A red arrow indicates that an object crossing the line in the arrow direction will cause an alarm.

• A green arrow indicates that an object crossing the line in the arrow direction will NOT cause an alarm.

• Multiple fence lines can be drawn.

• If All fences is selected, the object must cross all of the drawn fences to cause an alarm.

• To delete a line, select the Erase Line option and left-click on the endpoint of the line you want to delete in the image.

7. To designate an alarm zone, select the Use Mask option.

• Select the Erase option to erase the red mask.

• Select the Draw option to draw the red mask.

• The Size slider adjusts the pen thickness.

The following settings apply only to alarm zones:

• Any part of track denotes that the object can be anywhere in the red alarm zone to trigger an alarm. To alarm on objects moving anywhere in the alarm zone, select Any part of track.

• End of track denotes that the object has stopped in the alarm zone. To alarm on objects entering the alarm zone and stopping in it, select End of track.

• Beginning of track denotes that the object started moving in the alarm zone. To alarm on objects that were in the alarm zone and started to move, select Beginning of track.

• Any part of object denotes that any part of the object can be anywhere in the red alarm zone to trigger an alarm.

8. Click Next to continue to the next step in the wizard.
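The fence and alarm-zone options in steps 6 and 7 amount to simple per-track tests. The Python sketch below is a hypothetical illustration, not Symphony's implementation, and its names and conventions are assumptions introduced here:

- A track is a list of object positions.
- A fence is a segment plus the set of crossing directions whose arrows are red. The mapping of "forward" to an on-screen arrow is assumed.
- `in_zone` is a callable standing in for the red mask.
- "End of track" is simplified to "the last track point is in the zone".
- "Any part of object" is not modeled, because it needs object extents rather than points.

```python
from typing import Callable, List, Optional, Sequence, Set, Tuple

Point = Tuple[float, float]
Fence = Tuple[Point, Point, Set[str]]  # (start, end, red directions)


def _cross(a: Point, b: Point, p: Point) -> float:
    """Sign tells which side of the line a -> b the point p lies on."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def crossing(a: Point, b: Point, prev: Point, curr: Point) -> Optional[str]:
    """'forward', 'backward', or None for the step prev -> curr over segment a-b."""
    s0, s1 = _cross(a, b, prev), _cross(a, b, curr)
    if s0 * s1 >= 0:
        return None  # no change of side
    if _cross(prev, curr, a) * _cross(prev, curr, b) > 0:
        return None  # passes beyond the fence's endpoints
    return "forward" if s0 > 0 else "backward"


def fence_rule(track: Sequence[Point], fences: List[Fence], mode: str = "any") -> bool:
    """Any Fence: one red crossing suffices; All fences: every fence needs one."""
    if not fences:
        return False
    hits = [any(crossing(a, b, p, q) in red for p, q in zip(track, track[1:]))
            for a, b, red in fences]
    return all(hits) if mode == "all" else any(hits)


def zone_rule(track: Sequence[Point], in_zone: Callable[[Point], bool], mode: str) -> bool:
    """Alarm-zone options, simplified to tests on track points."""
    if not track:
        return False
    if mode == "any_part_of_track":
        return any(in_zone(p) for p in track)
    if mode == "end_of_track":
        return in_zone(track[-1])
    if mode == "beginning_of_track":
        return in_zone(track[0])
    raise ValueError(f"unsupported mode: {mode}")


# Hypothetical scene: vertical fences at x=5 and x=20; the object walks to x=10.
track = [(0.0, 5.0), (4.0, 5.0), (10.0, 5.0)]
fences = [((5.0, 0.0), (5.0, 10.0), {"forward"}),
          ((20.0, 0.0), (20.0, 10.0), {"forward"})]
print(fence_rule(track, fences, "any"))  # True: crosses fence 1 in a red direction
print(fence_rule(track, fences, "all"))  # False: never reaches fence 2
print(zone_rule(track, lambda p: p[0] >= 8, "end_of_track"))  # True
```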